Dear Reader,

Last month, Apple unveiled two new hotly anticipated versions of the iPhone: the iPhone 8 and iPhone X.

In case you missed it, during the demonstration of one of the iPhone X’s boldest new features — facial recognition software — Senior Vice President of Software Engineering Craig Federighi was unable to get it to work.

Here’s Senior Vice President of Worldwide Marketing at Apple Philip Schiller introducing the new model:

Photo courtesy of The Independent (JOSH EDELSON/AFP/Getty Images)

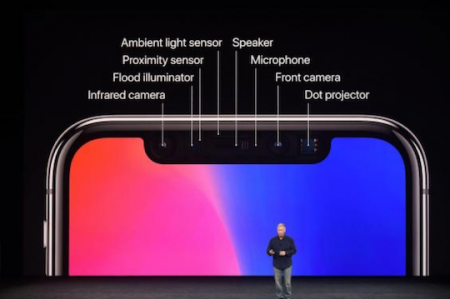

As you can see, there are several hidden sensors and outputs inside the notch at the top of the screen.

Whenever you look at your phone, the “flood illuminator” spots your face and alerts the phone that something is happening. The various sensors activate and begin a number of processes like taking an infrared photo and projecting a network of dots that arrange themselves over your face to be analyzed by the built-in camera. The data are processed in the iPhone X’s new chip, which compares them to the saved information about your face.

In other words, this feature is designed so that users simply have to look at their phone to unlock it. But when Federighi attempted to unlock his iPhone, the software on the phone failed to recognize him and the passcode numbers appeared instead.

How embarrassing.

According to Apple, the Face ID technology didn’t work because other employees had set up the phone for Federighi and in doing so had inadvertently tried to unlock it. In other words, the phone registered anyone who picked it up prior to the demo as someone who was trying to unlock it.

Just like Touch ID, which debuted on the iPhone 5S, the facial recognition software limits the number of unlocking attempts. After too many failed tries, it disables itself as a security measure and forces the user to enter a passcode.
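For readers who like to see the logic spelled out, here is a rough Python sketch of how an attempt limit like this could behave. To be clear, this is not Apple’s code. The class name, the method names and the limit of five failed attempts are all assumptions I picked for illustration.

```python
class BiometricLock:
    """Toy model of a biometric unlock that falls back to a passcode.

    Hypothetical illustration only; names and the default limit are assumptions.
    """

    def __init__(self, max_failed_attempts=5):
        self.max_failed_attempts = max_failed_attempts
        self.failed_attempts = 0

    @property
    def passcode_required(self):
        # Once the limit is reached, biometrics stay disabled until a passcode is entered.
        return self.failed_attempts >= self.max_failed_attempts

    def try_biometric(self, matches_owner):
        """Return True if the device unlocks on this biometric attempt."""
        if self.passcode_required:
            return False  # even the real owner is refused at this point
        if matches_owner:
            self.failed_attempts = 0
            return True
        self.failed_attempts += 1
        return False

    def enter_passcode(self, passcode, correct_passcode):
        """A correct passcode unlocks the device and re-enables biometrics."""
        if passcode == correct_passcode:
            self.failed_attempts = 0
            return True
        return False


lock = BiometricLock(max_failed_attempts=5)
for _ in range(5):
    lock.try_biometric(matches_owner=False)  # other people handling the phone

owner_unlocked = lock.try_biometric(matches_owner=True)
print(owner_unlocked, lock.passcode_required)
```

In this sketch, five non-matching attempts by other people use up the limit, so the owner’s own attempt is refused and the passcode screen is the only way in. That is the same kind of chain of events Apple described for the demo phone.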

Despite this initial hiccup, I have no doubt that using facial recognition software to identify people will soon become a security standard on many devices. However, there are some big risks that can’t be ignored, and they should be addressed before facial recognition becomes commonplace.

Risks like…

Lack of Accuracy

As Apple’s demo showed, this software is not always effective. Another example of its failure came when the FBI tried to use facial recognition to track down the Boston Marathon bombers. After authorities found security footage of the suspects, they ran the images through facial recognition software of their own but couldn’t get a match.

Also, according to an article on Recode, facial recognition software has a harder time reading darker skin tones. In a panel discussion at the World Economic Forum Annual Meeting in January, MIT Media Lab director Joichi Ito said this issue “likely stems from the fact that most engineers are white” — and so are the faces they use to train the software.

That being said, this technology is rapidly improving, so it’s only a matter of time before security cameras and facial recognition software become more accurate and effective. But until then, it’s quite possible that innocent people will be misidentified and criminals will walk free.

Invasion of Privacy

In recent years, we have seen a swift increase in the number of police officers wearing body cameras in cities across the U.S. Some states, such as Nevada, are requiring all police officers to be equipped with body cameras by next year.

The thing is, there are companies working on real-time facial recognition software, which means a police officer walking down the street and scanning the faces of passersby could instantly pull up information on them. Of course, this could become a very effective tool for catching criminals, but it also raises concerns about preserving our freedoms.

Last year, The Atlantic published a piece detailing legal issues with biometric technology, namely that judges can sign warrants forcing suspects to unlock their smartphones with their fingerprints, something they cannot do with passcodes.

With facial recognition software, police simply have to hold your phone up to your face and the software will start to work its magic, whether you’ve given permission for police to search your phone or not. Apple boasts that this kind of technology makes its phones more secure, but frankly, I don’t trust it.

It Can Be Defeated

When any new security technology emerges, hackers begin looking for ways to bypass or crack it. In fact, researchers testing their own product were able to fake out the facial recognition software by using pictures.

Basically, they scoured social media for photographs of individuals and used them to trick the facial recognition software into thinking it was looking at the actual person. Sure, the experiment was only 55–85% effective — but that’s an alarming success rate when you’re talking about circumventing security measures.

Here’s something else to consider: What if you grow a beard? Or shave it off? What if you get a haircut or wear different colored contacts? And what happens as you age?

Something tells me there are still some issues engineers have to work out before this software is as efficient and foolproof as Apple would have consumers believe.

A Disguise Won’t Do the Trick

In the spy world, being able to disguise yourself is one of the most critical skills you learn to avoid detection by targets, enemy agents and foreign governments so you can move around and complete your mission safely.

Whether it’s a hat, scarf, sunglasses — even facial hair — these items can disguise a person enough to make facial recognition difficult. But as the accuracy of facial recognition software improves, it may reach a point where even people with disguises can still be identified.

The scary thing is that right now, the accuracy rate of facial recognition software is roughly 85%, and government databases contain pictures of about 50% of the U.S. population. (This photo bank is composed mostly of photos from driver’s licenses and other government-issued IDs.)

With few regulations governing the use of this type of software, we should all be concerned about our personal privacy.

Stay safe,

Jason Hanson